The White House Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence and the Office of Management and Budget (OMB) draft guidance on managing agency adoption of AI establish new requirements for how federal agencies use artificial intelligence (AI).

As the OMB draft guidance notes, US federal agencies identified more than 700 ways they use AI to advance their missions in 2023, and that number is likely to grow as the technology matures. Current uses of AI across federal agencies include:

- Improving cybersecurity by identifying and responding to threats

- Detecting fraud and abuse

- Automating tasks to save time and money

- Providing personalized services to citizens

- Supporting data-driven decision-making

Key policy changes include:

- Mandating AI impact assessments and independent evaluations for high-risk use cases

- Designating Chief AI Officers to oversee AI adoption

- Enhancing transparency through public AI use case inventories

- Issuing guidance on mitigating algorithmic bias and ensuring representative data

- Setting compliance timelines, such as implementing minimum risk management practices by mid-2024

This post answers frequently asked questions about the key changes to evaluations, documentation, oversight, procurement, and compliance timelines.

Q: What are the new standards for evaluations and testing?

A: The executive order sets rigorous standards for bias mitigation, red teaming, and model performance reviews. These standards will shape how AI systems are tested and evaluated before deployment.

Q: What are the requirements for documentation and reporting?

A: The executive order calls for greater transparency, including detailed public inventories of AI use cases. Agencies will need to report more information about system data, capabilities, and testing procedures.

Q: How does the executive order affect ongoing oversight of AI systems?

A: Agencies will conduct regular monitoring and threshold-based reviews, and they must continuously mitigate emerging harms. These obligations will influence both the design and the day-to-day operation of AI systems.

Q: How does the executive order impact the procurement processes?

A: New guidance on responsible AI acquisition will introduce contract requirements for deliverables, such as bias testing and risk management.

Q: What are the compliance timelines outlined in the executive order?

A: Under the OMB draft guidance, agencies must implement the new minimum risk management practices by mid-2024. Contractors and other partners should plan to support these new assessment requirements on the same timeline.

Q: What are the main goals of the proposed updates to FedRAMP?

A: The announced changes aim to help FedRAMP adapt to the growth and diversity of cloud services, scale program operations, strengthen security reviews, and accelerate agency adoption of cloud products.

The full text of the Executive Order can be found here; its FedRAMP implications and the Office of Management and Budget’s guidance are available here.

Our team at Ventera has deep expertise honed from years of advising agencies and providing input to NIST working groups, such as the one on generative AI governance. Ventera is ready to advise our federal customers and help them implement data engineering, data governance, model training, evaluation, deployment, auditing, and monitoring within this evolving regulatory environment.

At Ventera, we have extensive experience guiding federal agencies such as the Centers for Medicare and Medicaid Services in developing and modernizing AI systems, including models that predict system uptime, while keeping their inferences transparent and compliant.

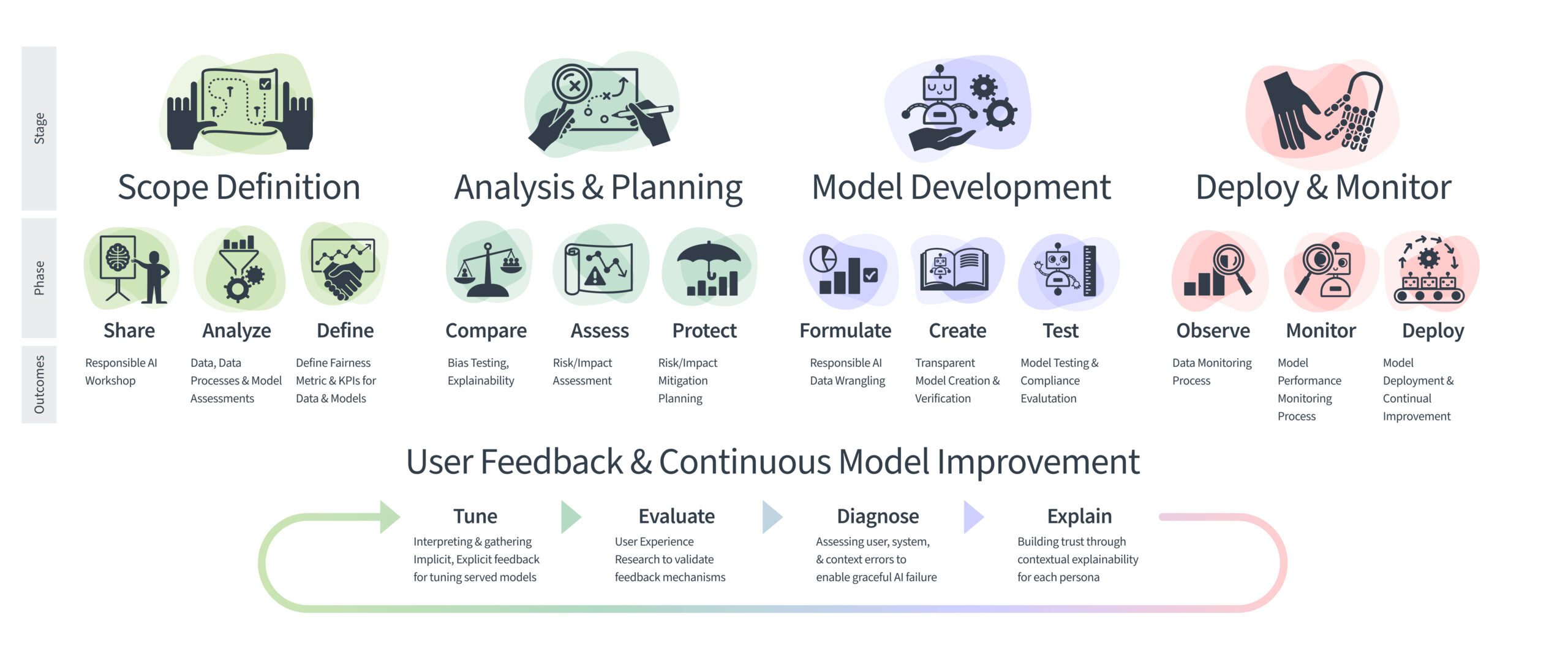

Image: Human-Centered AI at Ventera (HAiV) end-to-end Framework

We can provide end-to-end support to help your agency meet the new requirements for impact assessments, data preparation and governance for model development, and bias mitigation, all within the compliance timelines.